Making Robots Better Listeners: How Purdue Research Is Redefining Human–Robot Interaction

As robots move beyond industrial settings into classrooms, hospitals, and everyday environments, one of the biggest challenges isn’t mobility or autonomy—it’s social intelligence. Purdue University researcher Sooyeon Jeong is working to address that gap by designing robots that communicate more naturally and support humans in meaningful ways.

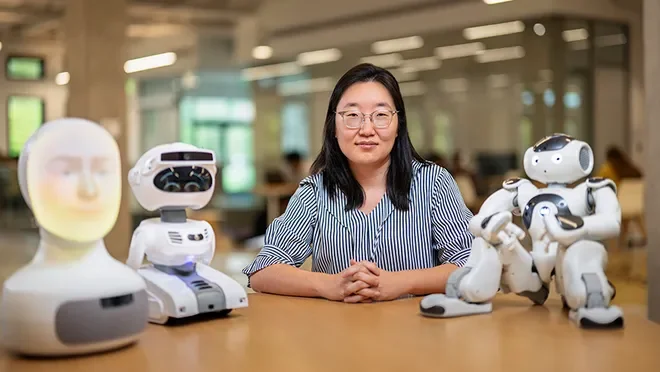

Jeong, an assistant professor of computer science, leads a research lab focused on the intersection of human behavior and artificial intelligence. Her work explores how robots can better understand emotional cues, engage in conversation, and collaborate with people across a range of settings, from healthcare to education.

Robots as Study Partners

One of Jeong’s recent projects examines how robots might serve as study companions, helping students maintain focus and motivation. While traditional productivity tools often rely on restrictions—such as blocking distractions—her team explored whether robots could instead provide encouragement, accountability, and emotional support.

Researchers tested several interaction models. In one scenario, the robot simply worked alongside the student, creating a sense of shared activity. In another, it provided periodic reminders about goals and progress. A third approach added emotional reinforcement, offering encouragement and suggesting breaks or movement to maintain productivity.

The results showed that no single strategy worked for everyone. The most effective approach varied with personality, mood, and subject matter. Some participants preferred gentle encouragement, while others wanted stricter accountability, which points to the need for adaptive systems that can respond to individual preferences in real time.

Designing More Relatable Robots

Jeong’s broader research addresses a fundamental challenge in robotics: making machines feel less transactional and more socially responsive.

Current voice assistants typically operate through short, question-and-answer exchanges. Human conversation, however, relies heavily on subtle signals—nods, affirmations, tone matching, and other “backchannels” that signal attention and understanding. Jeong’s team is developing methods to teach robots these behaviors using large language models and recordings of human interactions.

The goal is to create robots capable of empathetic listening and active engagement, enabling more natural communication in healthcare, therapy, and educational environments. Early work suggests that when robots demonstrate social awareness, users feel more comfortable and are more likely to benefit from the interaction.

Toward Socially Intelligent Physical AI

Jeong’s research reflects a broader shift in robotics toward systems that integrate physical capability with social and emotional intelligence. As robots increasingly operate in shared human spaces, their effectiveness may depend as much on communication design as on mechanical performance.

Her work is part of Purdue Computes, a multidisciplinary initiative advancing computing, physical AI, and emerging technologies. The research highlights an emerging reality: the next generation of robots will need not only to see and move, but also to listen, respond, and build trust.